A few years ago I heard about a project called Fourchette, which facilitated setting up one Heroku app per pull request on a project (aka review apps). I remember being all like THAT'S FREAKING BRILLIANT! Then I went back to whatever I was doing and never did anything about it.

Well, this week I finally had the time and inclination to get review apps working on Heroku. The instructions are out there, but they gave me enough trouble that I figured I'd document the gotchas for posterity.

#1. Understand the app.json file, really

We already had a tiny app.json file that we had created in connection with getting Heroku CI to run our test suite. All it had was an environments section that looked like this:

    "environments": {
      "test": {
        "env": {
          "DEBUG_MAIL": "true",
          "OK_TO_SEED": "true"
        },
        "addons": [
          "heroku-postgresql:hobby-basic",
          "heroku-redis:hobby-dev"
        ]
      }
    }

When I started trying to get review apps to work, I simply created a pull request and followed the dashboard instructions for creating review apps, assuming that since we already had an app.json file, it would just work. Nope, not at all.

After much thrashing, what finally got me over the hump was understanding the purpose of app.json from first principles, which didn't happen until I read this description of the Heroku Platform API. The app.json file originally came about as a way to automate the creation of an entire Heroku project, not just a CI or Review Apps configuration. It predates both CI and Review Apps and has, in essence, been repurposed for them.

#2. Add all your ENV variables

The concept of ENV variables being inherited from the designated parent app really threw me for a loop at first. I figured that the only ENV variables that needed to be declared in the env section of app.json were the ones I was overriding with a fixed value. Wrong again.

After much trial-and-error, I ended up with a list of all the same ENV variables as my staging environment. Some with fixed values, but most just marked as required.

    "env": {
      "AWS_ACCESS_KEY_ID": {
        "required": true
      },
      "AWS_SECRET_ACCESS_KEY": {
        "required": true
      },

This won't make sense if you're thinking of app.json as something that exists specifically for setting up Review Apps (see #1 above).
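Since the trial-and-error here was mostly about discovering which variables I had forgotten to declare, a small script could do that comparison up front. What follows is only a sketch: the `missing_env_keys` helper, the inline app.json text, and the list of staging keys (including `SECRET_KEY_BASE`) are all made up for illustration. In real life you would read your own app.json and paste in the key names from your staging app's config.

```ruby
require "json"

# Returns the names in `expected_keys` that the top-level "env" section of
# app.json does not declare, sorted alphabetically.
# (Hypothetical helper, not part of any Heroku tooling.)
def missing_env_keys(app_json_text, expected_keys)
  declared = JSON.parse(app_json_text).fetch("env", {}).keys
  (expected_keys - declared).sort
end

# Illustrative app.json content; a real script would File.read("app.json").
APP_JSON_TEXT = <<~TEXT
  {
    "env": {
      "AWS_ACCESS_KEY_ID": { "required": true },
      "AWS_SECRET_ACCESS_KEY": { "required": true },
      "DEBUG_MAIL": "true"
    }
  }
TEXT

# Assumed list of key names copied from the staging app's config vars.
STAGING_KEYS = %w[AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY DEBUG_MAIL SECRET_KEY_BASE]

puts missing_env_keys(APP_JSON_TEXT, STAGING_KEYS).inspect
# prints ["SECRET_KEY_BASE"]
```

Anything the script prints is a variable the parent app has but app.json never mentions, which is exactly the kind of gap that bit me.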

#3. Understand the lifecycle, especially with regard to add-ons

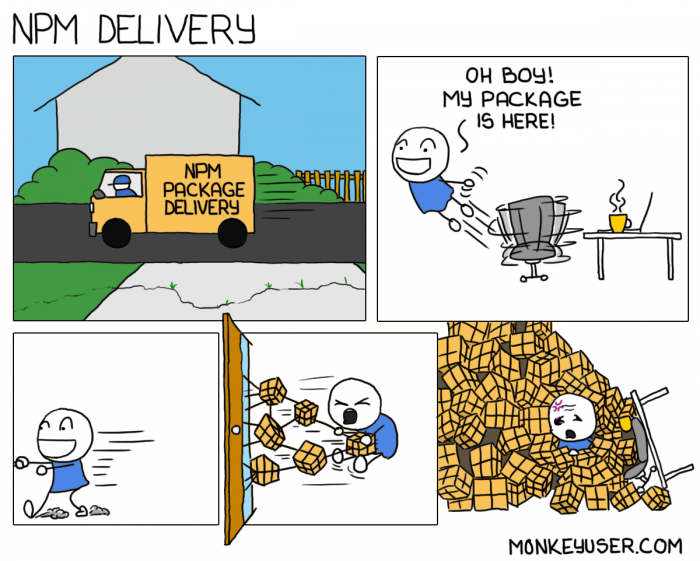

After everything was mostly working (meaning that I was able to get past the build stage and actually access my web app via the browser), I still kept getting errors about the Redis server being missing. To make a long story short, not only did I have to add Redis to the addons section, but I also had to delete the review app altogether and create it again so that the add-ons would be provisioned. (Add-ons are not affected by redeployment.)

    "addons": [
      "heroku-postgresql:hobby-basic",
      "heroku-redis:hobby-dev",
      "memcachier:dev"
    ],

In retrospect, I realize that the reason this was so unclear is that my review app's Postgres add-on was created automatically, even before I added an addons section to app.json. (Initially I thought it was coming from the test environment.)

I still don't know if Postgres is added by default to all review apps, or inherited from the parent app.
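Because add-ons only showed up for me when the review app was created from scratch, it helps to know when a change to app.json touches the addons list at all. Here is a rough sketch of that check. The `addon_plans` and `added_addons` helpers and the two inline app.json strings are hypothetical; in practice you might feed in the file from your main branch and the one from the pull request.

```ruby
require "json"

# Add-on entries in app.json can be plain strings ("heroku-redis:hobby-dev")
# or objects with a "plan" key; normalise both forms to the plan string.
def addon_plans(app_json_text)
  JSON.parse(app_json_text).fetch("addons", []).map do |addon|
    addon.is_a?(Hash) ? addon.fetch("plan") : addon
  end
end

# Plans listed in the new app.json that the old one did not have.
def added_addons(old_text, new_text)
  addon_plans(new_text) - addon_plans(old_text)
end

# Illustrative before/after versions of app.json.
OLD_APP_JSON = '{ "addons": ["heroku-postgresql:hobby-basic"] }'
NEW_APP_JSON = <<~TEXT
  {
    "addons": [
      "heroku-postgresql:hobby-basic",
      "heroku-redis:hobby-dev",
      { "plan": "memcachier:dev" }
    ]
  }
TEXT

added = added_addons(OLD_APP_JSON, NEW_APP_JSON)
puts "Newly declared add-ons: #{added.join(', ')}" unless added.empty?
# prints Newly declared add-ons: heroku-redis:hobby-dev, memcachier:dev
```

If the list is non-empty, a plain redeploy was not enough in my experience, and the review app had to be deleted and recreated.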

#4. Post deploy to the rescue

There's at least one thing you want to do once, every time a new review app is created, and that is to load your database schema. You probably want to seed data also.

    "scripts": {
      "postdeploy": "OK_TO_SEED=true bundle exec rails db:schema:load db:seed"
    }

As an aside, I have learned to put an OK_TO_SEED conditional check around destructive seed operations to help prevent them from running in production. This is especially important if you run your staging instances in production mode, like you should.
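For the curious, the guard can be as small as the sketch below. This is not a copy of my actual seeds file: the `ok_to_seed?` and `destructive_seed` helpers are names invented for this example, and the `puts` inside the block is a stand-in for whatever model calls your db/seeds.rb really makes.

```ruby
# True only when OK_TO_SEED is explicitly set to the string "true".
# Takes the environment as an argument so it is easy to try with a plain Hash.
def ok_to_seed?(env = ENV)
  env["OK_TO_SEED"] == "true"
end

# Runs the given block only when seeding has been explicitly allowed.
# Returns true if the block ran, false if it was skipped.
def destructive_seed(env = ENV)
  unless ok_to_seed?(env)
    warn 'Skipping destructive seed step: OK_TO_SEED is not "true"'
    return false
  end
  yield
  true
end

# In db/seeds.rb, the block would wrap the operations that wipe or overwrite data.
destructive_seed do
  puts "Wiping and reseeding..." # stand-in for real model calls
end
```

Comparing against the literal string "true" is deliberate: an unset or mistyped variable fails closed, so the destructive part is simply skipped.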